#serverless printing solutions


Go Green with Serverless Printing Innovation

Serverless printing solutions leverage cloud technology to bring efficiency and flexibility to your printing infrastructure. Unlike traditional setups that require dedicated servers and complex network configurations, serverless printing relies on cloud services to handle the printing process. This approach offers several key advantages:

Cost-Efficiency: With serverless printing, there's no need to invest in and maintain expensive hardware or dedicated servers.

Scalability: As your printing needs grow, serverless solutions seamlessly scale to accommodate increased demand.

Simplified Management: Serverless printing solutions shift infrastructure upkeep, such as server maintenance and updates, to the cloud provider, allowing your IT team to focus on more strategic tasks.

Accessibility: Because print jobs are routed through the cloud, users can print from wherever they are. This is especially beneficial for remote or distributed teams, enabling them to print documents without being physically connected to the office network.

Reduced Environmental Impact: By embracing a paperless or "less-paper" approach through serverless printing, businesses contribute to reducing paper waste and their carbon footprint.

Enhanced Security: Cloud providers invest heavily in securing their platforms, often offering advanced security features such as encryption and access controls.

In conclusion, serverless printing solutions mark a significant advancement in modernizing printing infrastructures. They empower businesses to achieve cost savings, scalability, and streamlined management, all while contributing to a more sustainable future.


Deploying SQLite for Local Data Storage in Industrial IoT Solutions

Introduction

In Industrial IoT (IIoT) applications, efficient data storage is critical for real-time monitoring, decision-making, and historical analysis. While cloud-based storage solutions offer scalability, local storage is often required for real-time processing, network independence, and data redundancy. SQLite, a lightweight yet powerful database, is an ideal choice for edge computing devices like ARMxy, offering reliability and efficiency in industrial environments.

Why Use SQLite for Industrial IoT?

SQLite is a self-contained, serverless database engine that is widely used in embedded systems. Its advantages include:

Lightweight & Fast: Requires minimal system resources, making it ideal for ARM-based edge gateways.

No Server Dependency: Operates as a standalone database, eliminating the need for complex database management.

Reliable Storage: Supports atomic transactions, ensuring data integrity even in cases of power failures.

Easy Integration: Bindings are available for most programming languages, so applications that read data over industrial protocols can write it to SQLite directly.
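The "Reliable Storage" point can be illustrated with a short sketch. It assumes Python's built-in sqlite3 module and an in-memory database, and it adds a NOT NULL constraint on sensor_id purely to provoke a failure; the helper name store_batch is hypothetical.

```python
import sqlite3

# In-memory database so the sketch leaves no file behind; a gateway would
# pass a path such as "iiot_data.db" instead.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sensor_data ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "timestamp DATETIME DEFAULT CURRENT_TIMESTAMP, "
    "sensor_id TEXT NOT NULL, "  # NOT NULL is an assumption made for this demo
    "value REAL)"
)


def store_batch(connection, readings):
    """Insert all readings or none of them; return True on success."""
    try:
        # Used as a context manager, the connection commits when the block
        # exits normally and rolls back if an exception is raised.
        with connection:
            connection.executemany(
                "INSERT INTO sensor_data (sensor_id, value) VALUES (?, ?)",
                readings,
            )
        return True
    except sqlite3.Error:
        return False


ok = store_batch(conn, [("temperature_01", 25.6), ("pressure_01", 101.3)])
# The second row violates NOT NULL, so the first row of this batch is
# rolled back along with it.
failed = store_batch(conn, [("temperature_01", 25.9), (None, 0.0)])
row_count = conn.execute("SELECT COUNT(*) FROM sensor_data").fetchone()[0]
print(ok, failed, row_count)
```

Grouping related writes into one transaction this way means a batch is either stored completely or not at all, which is the property that matters when power can drop mid-write.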

Setting Up SQLite on ARMxy

To deploy SQLite on an ARMxy Edge IoT Gateway, follow these steps:

1. Installing SQLite

Most Linux distributions for ARM-based devices include SQLite in their package manager. On Debian- or Ubuntu-based systems, install it with:

sudo apt update
sudo apt install sqlite3

Verify the installation:

sqlite3 --version

2. Creating and Managing a Database

To create a new database:

sqlite3 iiot_data.db

Create a table for sensor data storage:

CREATE TABLE sensor_data (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    timestamp DATETIME DEFAULT CURRENT_TIMESTAMP,
    sensor_id TEXT,
    value REAL
);

Insert sample data:

INSERT INTO sensor_data (sensor_id, value) VALUES ('temperature_01', 25.6);

Retrieve stored data:

SELECT * FROM sensor_data;

3. Integrating SQLite with IIoT Applications

ARMxy devices can use SQLite with programming languages like Python for real-time data collection and processing. For instance, using Python’s sqlite3 module:

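import sqlite3

# Open (or create) the database file from the previous step
conn = sqlite3.connect('iiot_data.db')
cursor = conn.cursor()

# Parameterized insert: the ? placeholders keep values out of the SQL text
cursor.execute("INSERT INTO sensor_data (sensor_id, value) VALUES (?, ?)", ("pressure_01", 101.3))
conn.commit()

# Read back every stored row
cursor.execute("SELECT * FROM sensor_data")
rows = cursor.fetchall()
for row in rows:
    print(row)

conn.close()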
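For the processing side, a gateway often needs an aggregate rather than every raw row. The following is a minimal sketch, assuming the same sensor_data schema and an in-memory database in place of iiot_data.db; the helper name latest_average is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for 'iiot_data.db'
conn.row_factory = sqlite3.Row      # allows access to columns by name
conn.execute(
    "CREATE TABLE sensor_data ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "timestamp DATETIME DEFAULT CURRENT_TIMESTAMP, "
    "sensor_id TEXT, "
    "value REAL)"
)


def latest_average(connection, sensor_id, n):
    """Average of the n most recent values for one sensor (None if empty)."""
    row = connection.execute(
        "SELECT AVG(value) AS avg_value FROM ("
        "SELECT value FROM sensor_data WHERE sensor_id = ? "
        "ORDER BY id DESC LIMIT ?)",
        (sensor_id, n),
    ).fetchone()
    return row["avg_value"]


# Three polling cycles of sample readings, committed together.
with conn:
    conn.executemany(
        "INSERT INTO sensor_data (sensor_id, value) VALUES (?, ?)",
        [
            ("temperature_01", 25.0), ("pressure_01", 101.3),
            ("temperature_01", 26.0), ("pressure_01", 101.1),
            ("temperature_01", 27.0), ("pressure_01", 100.9),
        ],
    )

avg_temp = latest_average(conn, "temperature_01", 2)
print(avg_temp)
```

Ordering by the autoincrementing id rather than by timestamp is a design choice for this sketch: CURRENT_TIMESTAMP has one-second resolution, so several readings can share a timestamp.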

Use Cases for SQLite in Industrial IoT

Predictive Maintenance: Store historical machine data to detect anomalies and schedule maintenance.

Energy Monitoring: Log real-time power consumption data to optimize usage and reduce costs.

Production Line Tracking: Maintain local records of manufacturing process data for compliance and quality control.

Remote Sensor Logging: Cache sensor readings when network connectivity is unavailable and sync with the cloud later.
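The remote sensor logging case can be sketched as a store-and-forward buffer. This is one possible approach under stated assumptions, not a prescribed design: the synced column and the sync_pending helper are hypothetical additions to the schema above, and the upload callable stands in for whatever cloud client the application uses.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for 'iiot_data.db'
conn.execute(
    "CREATE TABLE sensor_data ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "timestamp DATETIME DEFAULT CURRENT_TIMESTAMP, "
    "sensor_id TEXT, "
    "value REAL, "
    "synced INTEGER NOT NULL DEFAULT 0)"  # hypothetical flag column
)


def sync_pending(connection, upload, batch_size=100):
    """Upload unsynced rows in id order; mark them synced only on success."""
    rows = connection.execute(
        "SELECT id, timestamp, sensor_id, value FROM sensor_data "
        "WHERE synced = 0 ORDER BY id LIMIT ?",
        (batch_size,),
    ).fetchall()
    if not rows:
        return 0
    try:
        upload(rows)  # caller-supplied; expected to raise OSError when offline
    except OSError:
        return 0  # rows stay flagged as unsynced and are retried later
    with connection:
        connection.executemany(
            "UPDATE sensor_data SET synced = 1 WHERE id = ?",
            [(row[0],) for row in rows],
        )
    return len(rows)


with conn:
    conn.executemany(
        "INSERT INTO sensor_data (sensor_id, value) VALUES (?, ?)",
        [("temperature_01", 25.6), ("pressure_01", 101.3)],
    )

received = []  # a plain list stands in for the cloud endpoint


def offline_upload(rows):
    raise ConnectionError("network unreachable")  # simulates an outage


sent_offline = sync_pending(conn, offline_upload)
sent_online = sync_pending(conn, received.extend)
pending = conn.execute(
    "SELECT COUNT(*) FROM sensor_data WHERE synced = 0"
).fetchone()[0]
print(sent_offline, sent_online, pending)
```

Rows are flagged only after the upload returns, so a failure between upload and update can resend a batch; the receiving side would need to tolerate duplicates.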

Conclusion

SQLite is a robust, lightweight solution for local data storage in Industrial IoT environments. When deployed on ARMxy Edge IoT Gateways, it enhances real-time processing, improves data reliability, and reduces cloud dependency. By integrating SQLite into IIoT applications, industries can achieve better efficiency and resilience in data-driven operations.


hp printer support

Between high-profile ransomware attacks and recurring data breaches, enterprise data security is in the headlines like never before. That means it is also at the front of people's minds like never before. Keeping data safe has quickly become a high priority for any organization that is concerned about the privacy of its own data as well as its public reputation.

The importance of the print environment in all this hasn't gone unnoticed. According to a 2019 report from Quocirca, a large share of organizations rank print as one of their top five security risks. And over three-quarters of them are boosting their spending on network printing solutions that offer features like secure printing in order to help minimize data loss.

In most print environments, you will find three common vulnerabilities:

1. Central points of attack: With traditional print infrastructures like print servers, you have one device handling printing for an entire pool of users. From a print security standpoint, that is the same as putting all of your eggs in one basket. Hackers who want access to a rich pot of data can simply target the job queues or caches on the print servers.

Furthermore, print servers are single points of failure that restrict print availability. When the server goes offline, so does printing.

2. Printed document exposure: Someone prints a personal email, gets distracted and forgets to pick it up. Then someone else finds that printed email sitting in the printer's output tray and just happens to read it, resulting in plenty of embarrassment.

We've all seen something like that happen. Maybe we've been guilty of it ourselves. And what's worse, sometimes it's more than just a personal email. It could be private company memos or documents describing serious HR shake-ups or classified products.

3. Lack of unified oversight: This can include everything from unpatched server software and outdated drivers to employees being able to print virtually anything, including confidential documents, without risk of detection. Without the tools for comprehensive oversight, admins have limited ability to keep tabs on the state of the print environment and what is happening across it.

If print security is so important, what's the holdup?

With so many organizations at risk from one or more of these vulnerabilities, you'd think that they'd be ramping up print security without skipping a beat. But increased security doesn't necessarily come quick or easy.

For example, if you choose to implement secure printing, you will need some kind of secure release mechanism. That usually involves rolling out more infrastructure at a time when everyone is trying to find infrastructure reduction solutions.

There's also the lingering issue with print servers. Unless you ditch your print servers for good, there is no way to fully eliminate the problem of single points of attack. You keep your single points of failure, too, which makes high-availability printing harder to achieve.

Print security with reduced infrastructure and high availability

PrinterLogic is unique among network printing solutions because it lets you have print security without sacrificing high-availability printing, and it also reduces infrastructure. It combines stable, simple direct-IP printing with centralized management to create a robust, secure serverless infrastructure.

So what does that look like on the ground?

Eliminate print servers: PrinterLogic Web Stack (on-prem) and PrinterLogic SaaS (formerly PrinterCloud) offer full-featured printing with zero need for print servers, even in large and distributed organizations. By getting rid of print servers, you remove all of their security and availability shortcomings in one fell swoop.

Experience full oversight: A centralized admin console lets you manage and monitor every aspect of the print environment intuitively from a single pane of glass. The common driver repository makes it easy to keep drivers consistent and up to date across the organization.

Audit print activity: PrinterLogic's optional print auditing module can automatically generate reports and alerts. That allows admins to keep tabs on print activity and shine a light on previously dark corners of the print environment.

Implement easy-to-use secure printing: Want secure printing that doesn't annoy your end users? Among several different release mechanisms, PrinterLogic offers a Print Release App for iOS and Android. With the app, end users can securely release pending print jobs right from their smartphones. Better still, it doesn't require any extra infrastructure. And if you have an existing badge or card system, great. PrinterLogic can integrate seamlessly with that too.

With PrinterLogic, you get the enterprise-grade security your organization needs to protect its print data. But it doesn't stop there. You also get infrastructure reduction and high-availability printing.

Just consider how things went down with EPIC Management (read the case study). Secure release printing is notoriously tough to implement in healthcare organizations. And yet, with PrinterLogic, EPIC Management was able to create a solid chain of custody for its protected health information (PHI) while also simplifying its print environment.

To see what it's like to have print security with all the benefits and none of the trade-offs, sign up for a PrinterLogic demo today. You can test it in your own print environment free of charge for 30 days.


Appstudio xerox

Xerox® ConnectKey® Apps and Xerox® App Studio Frequently Asked Questions

ConnectKey Apps

To help simplify work, we’ve created a new category of solutions: Xerox® ConnectKey Apps. Like traditional software solutions, ConnectKey Apps extend the capabilities of the multifunction printer and maximize the hardware investment. Unlike traditional software, ConnectKey Apps do not require a dedicated server, PC or IT resource. Instead, ConnectKey Apps are lightweight, serverless solutions you can download and run from the ConnectKey multifunction printer.

Overview

1. What is a ConnectKey App?

ConnectKey Apps are small, installable software applications designed for Xerox® ConnectKey MFPs.

2. Do ConnectKey Apps replace traditional Xerox® Extensible Interface Platform® (EIP) solutions?

No, ConnectKey Apps do not replace traditional solutions. They can be used to complement existing solutions or to introduce customers to new workflows.

3. What ConnectKey Demo Apps are available?

Info App: This application shows the ability to share or communicate useful information to users.

Quick Print: Shows the ability to print files from a hosted site without the need for drivers. This example allows you to print brochures, posters and calendars.

Quick Scan to Email: Offers you a simplified email experience as an example of removing repetitive steps within a scan workflow.

Mobile Print Links: Shows how Apps can integrate with other technology such as Xerox Mobile Print using QR codes. By scanning the QR code from your smartphone, you’ll be directed to the Apple or Android app store to download the free Xerox® Mobile Print App.

Xerox today announced it’s expanding what multifunction printers (MFPs) can do in the workplace through advancements to its ConnectKey® Technology. The 14 Xerox ConnectKey-enabled i-Series MFPs are equipped with ready-to-use apps to speed up paper-dependent business processes and make it easier for users to collaborate and work more effectively.

“Imagine an MFP that can translate a document into more than 35 languages, or be customized and built to meet customers’ particular business needs,” said Jim Rise, senior vice president, Office and Solutions Business Group, Xerox. “The ConnectKey-enabled i-Series MFPs provide those services and more. It allows businesses to go beyond printing, scanning, faxing and copying, and gives channel partners the tools they need to capture new recurring revenue streams.”

MFPs built on the ConnectKey platform, a combination of technology and software, provide a critical advantage to small and medium-sized businesses and enterprises. Xerox was recently recognized by research firm IDC for its ConnectKey-enabled MFP solutions.

ConnectKey-enabled i-Series MFPs adapt to the way businesses work (on the go, virtually and through the cloud), allowing companies to meet today’s business trends, challenges and opportunities:

The new Xerox Easy Translation Service app helps partners and companies capitalize on the $38 billion market for language services and technology. The first-of-its-kind solution lets users scan a document through their MFP, snap a photo from their phone or upload it to a web portal.


Migrate an application from using GridFS to using Amazon S3 and Amazon DocumentDB (with MongoDB compatibility)

In many database applications there arises a need to store large objects, such as files, along with application data. A common approach is to store these files inside the database itself, despite the fact that a database isn’t the architecturally best choice for storing large objects. Primarily, because file system APIs are relatively basic (such as list, get, put, and delete), a fully-featured database management system, with its complex query operators, is overkill for this use case. Additionally, large objects compete for resources in an OLTP system, which can negatively impact query workloads. Moreover, purpose-built file systems are often far more cost-effective for this use case than using a database, in terms of storage costs as well as computing costs to support the file system.

The natural alternative to storing files in a database is on a purpose-built file system or object store, such as Amazon Simple Storage Service (Amazon S3). You can use Amazon S3 as the location to store files or binary objects (such as PDF files, image files, and large XML documents) that are stored and retrieved as a whole. Amazon S3 provides a serverless service with built-in durability, scalability, and security. You can pair this with a database that stores the metadata for the object along with the Amazon S3 reference. This way, you can query the metadata via the database APIs, and retrieve the file via the Amazon S3 reference stored along with the metadata. Using Amazon S3 and Amazon DocumentDB (with MongoDB compatibility) in this fashion is a common pattern.

GridFS is a file system that has been implemented on top of the MongoDB NoSQL database. In this post, I demonstrate how to replace the GridFS file system with Amazon S3. GridFS provides some nonstandard extensions to the typical file system (such as adding searchable metadata for the files) with MongoDB-like APIs, and I further demonstrate how to use Amazon S3 and Amazon DocumentDB to handle these additional use cases.
Solution overview

For this post, I start with some basic operations against a GridFS file system set up on a MongoDB instance. I demonstrate operations using the Python driver, pymongo, but the same operations exist in other MongoDB client drivers. I use an Amazon Elastic Compute Cloud (Amazon EC2) instance that has MongoDB installed; I log in to this instance and use Python to connect locally.

To demonstrate how this can be done with AWS services, I use Amazon S3 and an Amazon DocumentDB cluster for the more advanced use cases. I also use AWS Secrets Manager to store the credentials for logging into Amazon DocumentDB.

An AWS CloudFormation template is provided to provision the necessary components. It deploys the following resources:

A VPC with three private and one public subnets

An Amazon DocumentDB cluster

An EC2 instance with the MongoDB tools installed and running

A secret in Secrets Manager to store the database credentials

Security groups to allow the EC2 instance to communicate with the Amazon DocumentDB cluster

The only prerequisite for this template is an EC2 key pair for logging into the EC2 instance. For more information, see Create or import a key pair. The following diagram illustrates the components in the template.

This CloudFormation template incurs costs, and you should consult the relevant pricing pages before launching it.

Initial setup

First, launch the CloudFormation stack using the template. For more information on how to do this via the AWS CloudFormation console or the AWS Command Line Interface (AWS CLI), see Working with stacks.
Provide the following inputs for the CloudFormation template:

Stack name

Instance type for the Amazon DocumentDB cluster (default is db.r5.large)

Master username for the Amazon DocumentDB cluster

Master password for the Amazon DocumentDB cluster

EC2 instance type for the MongoDB database and the machine to use for this example (default: m5.large)

EC2 key pair to use to access the EC2 instance

SSH location to allow access to the EC2 instance

Username to use with MongoDB

Password to use with MongoDB

After the stack has completed provisioning, I log in to the EC2 instance using my key pair. The hostname for the EC2 instance is reported in the ClientEC2InstancePublicDNS output from the CloudFormation stack. For more information, see Connect to your Linux instance.

I use a few simple files for these examples. After I log in to the EC2 instance, I create five sample files as follows:

cd /home/ec2-user
echo Hello World! > /home/ec2-user/hello.txt
echo Bye World! > /home/ec2-user/bye.txt
echo Goodbye World! > /home/ec2-user/goodbye.txt
echo Bye Bye World! > /home/ec2-user/byebye.txt
echo So Long World! > /home/ec2-user/solong.txt

Basic operations with GridFS

In this section, I walk through some basic operations using GridFS against the MongoDB database running on the EC2 instance. All the following commands for this demonstration are available in a single Python script. Before using it, make sure to replace the username and password to access the MongoDB database with the ones you provided when launching the CloudFormation stack.

I use the Python shell. To start the Python shell, run the following code:

$ python3
Python 3.7.9 (default, Aug 27 2020, 21:59:41)
[GCC 7.3.1 20180712 (Red Hat 7.3.1-9)] on linux
Type "help", "copyright", "credits" or "license" for more information.
>>>

Next, we import a few packages we need:

>>> import pymongo
>>> import gridfs

Next, we connect to the local MongoDB database and create the GridFS object.
The CloudFormation template created a MongoDB username and password based on the parameters entered when launching the stack. For this example, I use labdb for the username and labdbpwd for the password, but you should replace those with the parameter values you provided. We use the gridfs database to store the GridFS data and metadata:

>>> mongo_client = pymongo.MongoClient(host="localhost")
>>> mongo_client["admin"].authenticate(name="labdb", password="labdbpwd")

Now that we have connected to MongoDB, we create a few objects. The first, db, represents the MongoDB database we use for our GridFS, namely gridfs. Next, we create a GridFS file system object, fs, that we use to perform GridFS operations. This GridFS object takes as an argument the MongoDB database object that was just created.

>>> db = mongo_client.gridfs
>>> fs = gridfs.GridFS(db)

Now that this setup is complete, list the files in the GridFS file system:

>>> print(fs.list())
[]

We can see that there are no files in the file system. Next, insert one of the files we created earlier:

>>> h = fs.put(open("/home/ec2-user/hello.txt", "rb").read(), filename="hello.txt")

This put command returns an ObjectId that identifies the file that was just inserted. I save this ObjectId in the variable h. We can show the value of h as follows:

>>> h
ObjectId('601b1da5fd4a6815e34d65f5')

Now when you list the files, you see the file we just inserted:

>>> print(fs.list())
['hello.txt']

Insert another file that you created earlier and list the files:

>>> b = fs.put(open("/home/ec2-user/bye.txt", "rb").read(), filename="bye.txt")
>>> print(fs.list())
['bye.txt', 'hello.txt']

Read the first file you inserted. One way to read the file is by the ObjectId:

>>> print(fs.get(h).read())
b'Hello World!\n'

GridFS also allows searching for files, for example by filename:

>>> res = fs.find({"filename": "hello.txt"})
>>> print(res.count())
1

We can see one file with the name hello.txt.
The result is a cursor to iterate over the files that were returned. To get the first file, call the next() method:

>>> res0 = res.next()
>>> res0.read()
b'Hello World!\n'

Next, delete the hello.txt file. To do this, use the ObjectId of the res0 file object, which is accessible via the _id field:

>>> fs.delete(res0._id)
>>> print(fs.list())
['bye.txt']

Only one file is now in the file system. Next, overwrite the bye.txt file with different data, in this case the goodbye.txt file contents:

>>> hb = fs.put(open("/home/ec2-user/goodbye.txt", "rb").read(), filename="bye.txt")
>>> print(fs.list())
['bye.txt']

This overwrite doesn’t actually delete the previous version. GridFS is a versioned file system and keeps older versions unless you specifically delete them. So, when we find the files based on the name bye.txt, we see two files:

>>> res = fs.find({"filename": "bye.txt"})
>>> print(res.count())
2

GridFS allows us to get specific versions of the file, via the get_version() method. By default, this returns the most recent version. Versions are numbered sequentially, starting at 0. So we can access the original version by specifying version 0. We can also access the most recent version by specifying version -1. First, the default, most recent version:

>>> x = fs.get_version(filename="bye.txt")
>>> print(x.read())
b'Goodbye World!\n'

Next, the first version:

>>> x0 = fs.get_version(filename="bye.txt", version=0)
>>> print(x0.read())
b'Bye World!\n'

The following code is the second version:

>>> x1 = fs.get_version(filename="bye.txt", version=1)
>>> print(x1.read())
b'Goodbye World!\n'

The following code is the latest version, which is the same as not providing a version, as we saw earlier:

>>> xlatest = fs.get_version(filename="bye.txt", version=-1)
>>> print(xlatest.read())
b'Goodbye World!\n'

An interesting feature of GridFS is the ability to attach metadata to the files. The API allows for adding any keys and values as part of the put() operation.
In the following code, we add a key-value pair with the key somekey and the value somevalue:

>>> bb = fs.put(open("/home/ec2-user/byebye.txt", "rb").read(), filename="bye.txt", somekey="somevalue")
>>> c = fs.get_version(filename="bye.txt")
>>> print(c.read())
b'Bye Bye World!\n'

We can access the custom metadata as a field of the file:

>>> print(c.somekey)
somevalue

Now that we have the metadata attached to the file, we can search for files with specific metadata:

>>> sk0 = fs.find({"somekey": "somevalue"}).next()

We can retrieve the value for the key somekey from the following result:

>>> print(sk0.somekey)
somevalue

We can also return multiple documents via this approach. In the following code, we insert another file with the somekey attribute, and then we can see that two files have the somekey attribute defined:

>>> h = fs.put(open("/home/ec2-user/solong.txt", "rb").read(), filename="solong.txt", somekey="someothervalue", key2="value2")
>>> print(fs.find({"somekey": {"$exists": True}}).count())
2

Basic operations with Amazon S3

In this section, I show how to get the equivalent functionality of GridFS using Amazon S3. There are some subtle differences in terms of unique identifiers and the shape of the returned objects, so it’s not a drop-in replacement for GridFS. However, the major functionality of GridFS is covered by the Amazon S3 APIs. I walk through the same operations as in the previous section, except using Amazon S3 instead of GridFS.

First, we create an S3 bucket to store the files. For this example, I use the bucket named blog-gridfs. You need to choose a different name for your bucket, because bucket names are globally unique. For this demonstration, we also want to enable versioning for this bucket. This allows Amazon S3 to behave similarly to GridFS with respect to versioning files.

As with the previous section, the following commands are included in a single Python script, but I walk through these commands one by one.
Before using the script, make sure to replace the secret name with the one created by the CloudFormation stack, as well as the Region you’re using, and the S3 bucket you created.

First, we import a few packages we need:

>>> import boto3

Next, we connect to Amazon S3 and create the S3 client:

>>> session = boto3.Session()
>>> s3_client = session.client('s3')

It’s convenient to store the name of the bucket we created in a variable. Set the bucket variable appropriately:

>>> bucket = "blog-gridfs"

Now that this setup is complete, we list the files in the S3 bucket:

>>> s3_client.list_objects(Bucket=bucket)
{'ResponseMetadata': {'RequestId': '031B62AE7E916762', 'HostId': 'UO/3dOVHYUVYxyrEPfWgVYyc3us4+0NRQICA/mix//ZAshlAwDK5hCnZ+/wA736x5k80gVcyZ/w=', 'HTTPStatusCode': 200, 'HTTPHeaders': {'x-amz-id-2': 'UO/3dOVHYUVYxyrEPfWgVYyc3us4+0NRQICA/mix//ZAshlAwDK5hCnZ+/wA736x5k80gVcyZ/w=', 'x-amz-request-id': '031B62AE7E916762', 'date': 'Wed, 03 Feb 2021 22:37:12 GMT', 'x-amz-bucket-region': 'us-east-1', 'content-type': 'application/xml', 'transfer-encoding': 'chunked', 'server': 'AmazonS3'}, 'RetryAttempts': 0}, 'IsTruncated': False, 'Marker': '', 'Name': 'blog-gridfs', 'Prefix': '', 'MaxKeys': 1000, 'EncodingType': 'url'}

The output is more verbose, but we’re most interested in the Contents field, which is an array of objects. In this example, it’s absent, denoting an empty bucket. Next, insert one of the files we created earlier:

>>> h = s3_client.put_object(Body=open("/home/ec2-user/hello.txt", "rb").read(), Bucket=bucket, Key="hello.txt")

This put_object command takes three parameters:

Body: The bytes to write

Bucket: The name of the bucket to upload to

Key: The file name

The key can be more than just a file name; it can also include subdirectories, such as subdir/hello.txt.
The put_object command returns information acknowledging the successful insertion of the file, including the VersionId:

>>> h
{'ResponseMetadata': {'RequestId': 'EDFD20568177DD45', 'HostId': 'sg8q9KNxa0J+4eQUMVe6Qg2XsLiTANjcA3ElYeUiJ9KGyjsOe3QWJgTwr7T3GsUHi3jmskbnw9E=', 'HTTPStatusCode': 200, 'HTTPHeaders': {'x-amz-id-2': 'sg8q9KNxa0J+4eQUMVe6Qg2XsLiTANjcA3ElYeUiJ9KGyjsOe3QWJgTwr7T3GsUHi3jmskbnw9E=', 'x-amz-request-id': 'EDFD20568177DD45', 'date': 'Wed, 03 Feb 2021 22:39:19 GMT', 'x-amz-version-id': 'ADuqSQDju6BJHkw86XvBgIPKWalQMDab', 'etag': '"8ddd8be4b179a529afa5f2ffae4b9858"', 'content-length': '0', 'server': 'AmazonS3'}, 'RetryAttempts': 0}, 'ETag': '"8ddd8be4b179a529afa5f2ffae4b9858"', 'VersionId': 'ADuqSQDju6BJHkw86XvBgIPKWalQMDab'}

Now if we list the files, we see the file we just inserted:

>>> listing = s3_client.list_objects(Bucket=bucket)
>>> print([i["Key"] for i in listing["Contents"]])
['hello.txt']

Next, insert the other file we created earlier and list the files:

>>> b = s3_client.put_object(Body=open("/home/ec2-user/bye.txt", "rb").read(), Bucket=bucket, Key="bye.txt")
>>> print([i["Key"] for i in s3_client.list_objects(Bucket=bucket)["Contents"]])
['bye.txt', 'hello.txt']

Read the first file. In Amazon S3, use the bucket and key to get the object. The Body field is a streaming object that can be read to retrieve the contents of the object:

>>> s3_client.get_object(Bucket=bucket, Key="hello.txt")["Body"].read()
b'Hello World!\n'

Similar to GridFS, Amazon S3 also allows you to search for files by file name. In the Amazon S3 API, you can specify a prefix that is used to match against the key for the objects:

>>> print([i["Key"] for i in s3_client.list_objects(Bucket=bucket, Prefix="hello.txt")["Contents"]])
['hello.txt']

We can see one file with the name hello.txt. Next, delete the hello.txt file.
To do this, we use the bucket and file name, or key:

>>> s3_client.delete_object(Bucket=bucket, Key="hello.txt")
{'ResponseMetadata': {'RequestId': '56C082A6A85F5036', 'HostId': '3fXy+s1ZP7Slw5LF7oju5dl7NQZ1uXnl2lUo1xHywrhdB3tJhOaPTWNGP+hZq5571c3H02RZ8To=', 'HTTPStatusCode': 204, 'HTTPHeaders': {'x-amz-id-2': '3fXy+s1ZP7Slw5LF7oju5dl7NQZ1uXnl2lUo1xHywrhdB3tJhOaPTWNGP+hZq5571c3H02RZ8To=', 'x-amz-request-id': '56C082A6A85F5036', 'date': 'Wed, 03 Feb 2021 22:45:57 GMT', 'x-amz-version-id': 'rVpCtGLillMIc.I1Qz0PC9pomMrhEBGd', 'x-amz-delete-marker': 'true', 'server': 'AmazonS3'}, 'RetryAttempts': 0}, 'DeleteMarker': True, 'VersionId': 'rVpCtGLillMIc.I1Qz0PC9pomMrhEBGd'}
>>> print([i["Key"] for i in s3_client.list_objects(Bucket=bucket)["Contents"]])
['bye.txt']

The bucket now only contains one file. Let’s overwrite the bye.txt file with different data, in this case the goodbye.txt file contents:

>>> hb = s3_client.put_object(Body=open("/home/ec2-user/goodbye.txt", "rb").read(), Bucket=bucket, Key="bye.txt")
>>> print([i["Key"] for i in s3_client.list_objects(Bucket=bucket)["Contents"]])
['bye.txt']

Similar to GridFS, with versioning turned on in Amazon S3, an overwrite doesn’t actually delete the previous version. Amazon S3 keeps older versions unless you specifically delete them. So, when we list the versions of the bye.txt object, we see two files:

>>> y = s3_client.list_object_versions(Bucket=bucket, Prefix="bye.txt")
>>> versions = sorted([(i["Key"],i["VersionId"],i["LastModified"]) for i in y["Versions"]], key=lambda y: y[2])
>>> print(len(versions))
2

As with GridFS, Amazon S3 allows us to get specific versions of the file, via the get_object() method. By default, this returns the most recent version. Unlike GridFS, versions in Amazon S3 are identified with a unique identifier, VersionId, not a counter. We can get the versions of the object and sort them based on their LastModified field.
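The sort-by-LastModified idea can be wrapped in a small helper that accepts a GridFS-style version index. This is a sketch under stated assumptions: the function name resolve_version_id is hypothetical, and the sample entries are hand-written stand-ins shaped like the "Versions" list returned by list_object_versions, so the code runs without AWS access.

```python
from datetime import datetime, timedelta, timezone


def resolve_version_id(versions, key, version=-1):
    """Map a GridFS-style version index to an S3 VersionId.

    `versions` is a list shaped like the "Versions" field returned by
    list_object_versions. Index 0 is the oldest version of `key` and -1
    the newest, mirroring GridFS get_version(). Raises IndexError when
    the requested version does not exist.
    """
    # Prefix matching can return longer keys too, so filter on the exact key.
    matching = [v for v in versions if v["Key"] == key]
    ordered = sorted(matching, key=lambda v: v["LastModified"])
    return ordered[version]["VersionId"]


# Made-up entries in arbitrary order, to show that the sort does the work.
t0 = datetime(2021, 2, 3, 22, 40, tzinfo=timezone.utc)
sample = [
    {"Key": "bye.txt", "VersionId": "v-newest", "LastModified": t0 + timedelta(minutes=2)},
    {"Key": "bye.txt", "VersionId": "v-oldest", "LastModified": t0},
    {"Key": "bye.txt.bak", "VersionId": "v-other", "LastModified": t0 + timedelta(minutes=3)},
    {"Key": "bye.txt", "VersionId": "v-middle", "LastModified": t0 + timedelta(minutes=1)},
]

print(resolve_version_id(sample, "bye.txt", 0))
print(resolve_version_id(sample, "bye.txt", 1))
print(resolve_version_id(sample, "bye.txt"))
```

With real data, the returned value would be passed as the VersionId argument to get_object. If two versions share a LastModified timestamp, this sketch does not define an order between them.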
We can access the original version by specifying the VersionId of the first element in the sorted list. We can also access the most recent version by not specifying a VersionId:

>>> x0 = s3_client.get_object(Bucket=bucket, Key="bye.txt", VersionId=versions[0][1])
>>> print(x0["Body"].read())
b'Bye World!\n'
>>> x1 = s3_client.get_object(Bucket=bucket, Key="bye.txt", VersionId=versions[1][1])
>>> print(x1["Body"].read())
b'Goodbye World!\n'
>>> xlatest = s3_client.get_object(Bucket=bucket, Key="bye.txt")
>>> print(xlatest["Body"].read())
b'Goodbye World!\n'

Similar to GridFS, Amazon S3 provides the ability to attach metadata to the files. The API allows for adding any keys and values as part of the Metadata field in the put_object() operation. In the following code, we add a key-value pair with the key somekey and the value somevalue:

>>> bb = s3_client.put_object(Body=open("/home/ec2-user/byebye.txt", "rb").read(), Bucket=bucket, Key="bye.txt", Metadata={"somekey": "somevalue"})
>>> c = s3_client.get_object(Bucket=bucket, Key="bye.txt")

We can access the custom metadata via the Metadata field:

>>> print(c["Metadata"]["somekey"])
somevalue

We can also print the contents of the file:

>>> print(c["Body"].read())
b'Bye Bye World!\n'

One limitation with Amazon S3 versus GridFS is that you can’t search for objects based on the metadata. To accomplish this use case, we employ Amazon DocumentDB.

Use cases with Amazon S3 and Amazon DocumentDB

Some use cases may require you to find objects or files based on the metadata, beyond just the file name. For example, in an asset management use case, we may want to record the author or a list of keywords. To do this, we can use Amazon S3 and Amazon DocumentDB to provide a very similar developer experience, but leveraging the power of a purpose-built document database and a purpose-built object store.
In this section, I walk through how to use these two services to cover the additional use case of needing to find files based on the metadata. First, we import a few packages:

>>> import json
>>> import pymongo
>>> import boto3

We use the credentials that we created when we launched the CloudFormation stack. These credentials were stored in Secrets Manager. The name of the secret is the name that you used to create the stack (for this post, docdb-mongo) with -DocDBSecret appended, which gives docdb-mongo-DocDBSecret. We assign this to a variable. You should use the appropriate Secrets Manager secret name for your stack:

>>> secret_name = 'docdb-mongo-DocDBSecret'

Next, we create a Secrets Manager client and retrieve the secret. Make sure to set the region variable to the Region in which you deployed the stack:

>>> secret_client = session.client(service_name='secretsmanager', region_name=region)
>>> secret = json.loads(secret_client.get_secret_value(SecretId=secret_name)['SecretString'])

This secret contains the four pieces of information that we need to connect to the Amazon DocumentDB cluster:

Cluster endpoint

Port

Username

Password

Next we connect to the Amazon DocumentDB cluster:

>>> docdb_client = pymongo.MongoClient(host=secret["host"], port=secret["port"], ssl=True, ssl_ca_certs="/home/ec2-user/rds-combined-ca-bundle.pem", replicaSet='rs0', connect = True)
>>> docdb_client["admin"].authenticate(name=secret["username"], password=secret["password"])
True

We use the database fs and the collection files to store our file metadata:

>>> docdb_db = docdb_client["fs"]
>>> docdb_coll = docdb_db["files"]

Because we already have data in the S3 bucket, we create entries in the Amazon DocumentDB collection for those files.
The information we store is analogous to the information in the GridFS fs.files collection, namely the following:

bucket – The S3 bucket

filename – The S3 key

version – The S3 VersionId

length – The file length in bytes

uploadDate – The S3 LastModified date

Additionally, any metadata that was stored with the objects in Amazon S3 is also added to the document in Amazon DocumentDB:

>>> for ver in s3_client.list_object_versions(Bucket=bucket)["Versions"]:
...     obj = s3_client.get_object(Bucket=bucket, Key=ver["Key"], VersionId=ver["VersionId"])
...     to_insert = {"bucket": bucket, "filename": ver["Key"], "version": ver["VersionId"], "length": obj["ContentLength"], "uploadDate": obj["LastModified"]}
...     to_insert.update(obj["Metadata"])
...     docdb_coll.insert_one(to_insert)
...

Now we can find files by their metadata:

>>> sk0 = docdb_coll.find({"somekey": "somevalue"}).next()
>>> print(sk0["somekey"])
somevalue

To read the file itself, we can use the bucket, file name, and version to retrieve the object from Amazon S3:

>>> print(s3_client.get_object(Bucket=sk0["bucket"], Key=sk0["filename"], VersionId=sk0["version"])["Body"].read())
b'Bye Bye World!\n'

Now we can put another file with additional metadata. To do this, we write the file to Amazon S3 and insert the metadata into Amazon DocumentDB:

>>> h = s3_client.put_object(Body=open("/home/ec2-user/solong.txt", "rb").read(), Bucket=bucket, Key="solong.txt")
>>> docdb_coll.insert_one({"bucket": bucket, "filename": "solong.txt", "version": h["VersionId"], "somekey": "someothervalue", "key2": "value2"})

Finally, we can search for files with somekey defined, as we did with GridFS, and see that two files match:

>>> print(docdb_coll.find({"somekey": {"$exists": True}}).count())
2

Clean up

You can delete the resources created in this post by deleting the stack via the AWS CloudFormation console or the AWS CLI.

Conclusion

Storing large objects inside a database is typically not the best architectural choice.
Instead, coupling a distributed object store, such as Amazon S3, with the database provides a more architecturally sound solution. Storing the metadata in the database and a reference to the location of the object in the object store allows for efficient query and retrieval operations, while reducing the strain on the database for serving object storage operations.

In this post, I demonstrated how to use Amazon S3 and Amazon DocumentDB in place of MongoDB’s GridFS. I leveraged Amazon S3’s purpose-built object store and Amazon DocumentDB, a fast, scalable, highly available, and fully managed document database service that supports MongoDB workloads.

For more information about recent launches and blog posts, see Amazon DocumentDB (with MongoDB compatibility) resources.

About the author

Brian Hess is a Senior Solution Architect Specialist for Amazon DocumentDB (with MongoDB compatibility) at AWS. He has been in the data and analytics space for over 20 years and has extensive experience with relational and NoSQL databases.

https://aws.amazon.com/blogs/database/migrate-an-application-from-using-gridfs-to-using-amazon-s3-and-amazon-documentdb-with-mongodb-compatibility/

0 notes

Text

How to Know When a Cloud Computing Trend Has Come to Its End

2/5/2020 01:00 PM

Even the best cloud computing trend must end: Why PaaS, serverless, OpenStack and multicloud have already seen their best days. -- ITPro Today

We’re well over a decade into the era of cloud computing, and during that time we have seen one cloud computing trend after another. Indeed, the nature of the cloud has changed substantially in the last 10 years, and cloud-based services and strategies that were once cutting-edge are now mundane, or even approaching obsolescence. Here’s a rundown of the cloud computing trends that may be slowing or even coming to an end.

Read the rest of Christopher Tozzi's article on cloud computing trends on ITPro Today.

0 notes

Text

Top 10 Emerging Technologies in 2019

We can’t deny that technological advancements and creative innovations have played a significant role in our lives. The life we live today is a result of those advancements: things are now better, quicker, simpler, and more convenient. Here are the top 10 emerging technologies of 2019 that will affect our lives in unimagined ways:

1. The 5G Revolution:

According to Statista, 5G is expected to hit the market by 2020. By 2021, the number of 5G connections is forecast to reach between 20 million and 100 million; some estimates put the figure at 200 million. The rise of 5G networks is expanding our capacity to move, manipulate, and analyze data across wireless platforms. As 5G rolls out more fully in the coming years, it will drive the development of more complex applications to solve problems and accelerate growth across industries. With the release of 5G, mobile apps are expected to run more efficiently and smoothly, which in turn will boost the overall productivity of developers and users. Let’s see:

How is 5G technology changing the mobile app development landscape?

“The development and deployment of 5G will enable business impact at a level few technologies ever have, providing wireless at the speed and latency required for complex solutions like driverless vehicles.” Moreover, once fully deployed geographically, 5G will help emerging markets realize the same ‘speed of business’ as their mature counterparts. Solution providers that develop 5G-based solutions for specific industry applications will have profitable early-mover advantages.

2. Artificial intelligence (AI):

Artificial intelligence is already fundamentally changing how customers interact with businesses through smart websites and bots, and these tools are becoming increasingly commoditized and integrated into everyday work. Here’s a look at how AI will transform enterprises:

A quick guide to choose AI for Enterprise Software Development!

The biggest effects across all industries, from retail to healthcare and from hospitality to financial software solutions, are felt when AI improves data security, decision-making speed and accuracy, and employee output and training. “With more capable staff, better-qualified sales leads, more efficient issue resolution, and systems that feed real data back in for future process and product improvements, companies using AI technologies can use resources with far greater efficiency. Best of all, as investment and competition increase in the AI domain, costs are reduced.”

3. Internet of Things (IoT):

IoT is driving business change by providing the data needed to improve marketing, increase sales, and decrease costs, according to the report. The Internet of Things (IoT) is taking over everything. Increase your customer base and decrease spend by bringing IoT application development into your business. “Everyone in the technology world, as well as many consumers, is hearing the term Internet of Things,” said Frank Raimondi, a professional who works in strategic channel and business development. “To say it’s confusing and overwhelming is an understatement. IoT may mean many things to many people, but it can clearly mean incremental or new business to a channel partner if they start adding relevant IoT solutions for their existing and new customers. More importantly, they don’t have to start over from scratch.”

4. Biometrics:

Biometrics, including face, fingerprint, and retina scans, are becoming standard methods for verifying identity. These methods will form the secure foundation for solutions delivered by IT companies going forward. Next-generation biometrics has raised the standard of security. Advancing in every area from fingerprint scanning to voice recognition, biometric systems are changing how identification and the assessment of individuals’ personal data are carried out. Today’s biometrics use the latest technology to offer complete and accurate solutions that can capture images as well as analyze real-time video data.

5. Blockchain:

More organizations are exploring and implementing blockchain solutions to address the growing need to secure and manage transactions over the internet. By definition, blockchain is a decentralized ledger management system that stores information across a wide network of nodes (computer devices) and helps users track and view transactions that take place within a network. “Blockchain came crashing down from the peak of its hype cycle, and that is probably for the best. Now that the shine of novelty and the buzz of the masses are gone, the dynamic of work around blockchain has taken a complete U-turn, again for the best.”

6. Robotics:

Robotics is automating routine processes by using machines to make businesses faster, less expensive, and more efficient while reducing manual labor. The combination of artificial intelligence (AI) and industrial or collaborative robotics has the potential to change the business. AI unlocks entirely new capabilities for robots, which, without AI, are rigid and unresponsive to the world around them, and in doing so it shapes the future of enterprises. The potential for disruption in the industrial sector is high. Although industrial processes are already highly automated, there are still plenty of ways in which industrial robots can be improved with the addition of AI.

7. Serverless computing:

Serverless computing enables organizations to create a NoOps IT environment that is automated and abstracted from the underlying infrastructure, reducing operational costs and allowing businesses to invest in developing new capabilities that add more value. Serverless computing was new to the list this year, alongside robotics, replacing quantum computing and automation.

8. Drones:

Drones enable robotic automation with fewer geographical restrictions. Opportunities for development and integration are high in this market. Few pieces of technology excite tech enthusiasts and the general public quite like drones. These unmanned flying machines ignite imaginations around the world, and in reality we are only beginning to tap their potential. Rather than flying toys, drone technology is being opened up for practical uses in aerial data collection and information management. While drones have been around for a few years, algorithms and programming are only now starting to catch up with drone capabilities, presenting exciting possibilities not just for the next year but for the next decade.

9. Virtual Reality (VR)/Augmented Reality (AR):

Using VR/AR app development, mixed reality, AI development solutions, and sensor technologies can help organizations improve operational efficiency and individual productivity, as indicated by various survey reports. The two technologies also have clear use cases in education. Virtual environments let students practice anything from construction to flight to surgery without the risks of real-world training, while augmented environments mean information can be passed to learners in real time about objectives, hazards, or best practice.

10. 3D printing:

3D printing offers a solution for low-volume manufacturing of complex parts, as well as fast local production of hard-to-find products. As more affordable products become available, opportunities for this industry will continue to grow.

CDN Solutions Group offers affordable custom software development and mobile app development services based on top emerging technologies. Look at our portfolio and connect with us at [email protected], get a free quote today Here, or call us at +1-347-293-1799 or +61-408-989-495.

#Emerging Technologies#5G#5G Technology#Artificial intelligence#Internet Of Things#Biometrics#Blockchain#Robotics#Drones Automatons#Virtual Reality#Augmented Reality

0 notes

Photo

Create a Cron Job on AWS Lambda

Cron jobs are really useful tools in any Linux or Unix-like operating system. They allow us to schedule scripts to be executed periodically. Their flexibility makes them ideal for repetitive tasks like backups and system cleaning, but also data fetching and data processing.

For all the good things they offer, cron jobs also have some downsides. The main one is that you need a dedicated server or a computer that runs pretty much 24/7. Most of us don't have that luxury. For those of us who don't have access to a machine like that, AWS Lambda is the perfect solution.

AWS Lambda is an event-driven, serverless computing platform that's part of Amazon Web Services. It’s a computing service that runs code in response to events and automatically manages the computing resources required by that code. Not only is it available to run our jobs 24/7, but it also automatically allocates the resources needed for them.

Setting up a Lambda in AWS involves more than just implementing a couple of functions and hoping they run periodically. To get them up and running, several services need to be configured first and need to work together. In this tutorial, we'll first go through all the services we'll need to set up, and then we'll implement a cron job that will fetch some updated cryptocurrency prices.

Understanding the Basics

As we said earlier, some AWS services need to work together in order for our Lambda function to work as a cron job. Let's have a look at each one of them and understand their role in the infrastructure.

S3 Bucket

An Amazon S3 bucket is a public cloud storage resource available in Amazon Web Services' (AWS) Simple Storage Service (S3), an object storage offering. Amazon S3 buckets, which are similar to file folders, store objects, which consist of data and its descriptive metadata. — TechTarget

Every Lambda function needs to be prepared as a “deployment package”. The deployment package is a .zip file consisting of the code and any dependencies that code might need. That .zip file can then be uploaded via the web console or located in an S3 bucket.
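As a rough illustration of what a deployment package is, the sketch below builds a .zip archive in memory using only Python's standard library. The helper name `build_deployment_package` and the `index.js` contents are assumptions made for the example; a real package would hold the actual handler code plus any dependencies it needs.

```python
import io
import zipfile


def build_deployment_package(files):
    """Return the bytes of a .zip archive containing the given files.

    `files` maps archive paths (e.g. "index.js") to contents (str or bytes).
    """
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for path, content in files.items():
            archive.writestr(path, content)
    return buffer.getvalue()


# Hypothetical handler source, used only to have something to package.
package = build_deployment_package({
    "index.js": "exports.handler = async (event) => { return {}; };\n",
})

# Re-open the archive to confirm what ended up inside it.
with zipfile.ZipFile(io.BytesIO(package)) as archive:
    packaged_names = archive.namelist()
```

The resulting bytes are what would be uploaded through the web console or placed in an S3 bucket.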

IAM Role

An IAM role is an IAM identity that you can create in your account that has specific permissions. An IAM role is similar to an IAM user, in that it is an AWS identity with permission policies that determine what the identity can and cannot do in AWS. — Amazon

We’ll need to manage permissions for our Lambda function with IAM. At the very least it should be able to write logs, so it needs access to CloudWatch Logs. This is the bare minimum and we might need other permissions for our Lambda function. For more information, the AWS Lambda permissions page has all the information needed.
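To make the "bare minimum" concrete, here is a sketch of the two JSON documents such a role typically consists of, written as Python dictionaries: a trust policy that lets the Lambda service assume the role, and a permissions policy that allows writing to CloudWatch Logs. The variable names are arbitrary, and the wildcard `Resource` is an assumption made for brevity; a real setup would usually narrow it to specific log groups.

```python
import json

# Trust policy: lets the Lambda service assume the role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Permissions policy: just enough to create and write log streams.
LOGGING_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "logs:CreateLogGroup",
                "logs:CreateLogStream",
                "logs:PutLogEvents",
            ],
            "Resource": "*",  # assumption: kept broad for brevity
        }
    ],
}

# IAM expects these documents as JSON strings.
trust_policy_json = json.dumps(TRUST_POLICY, indent=2)
logging_policy_json = json.dumps(LOGGING_POLICY, indent=2)
```

Any extra capability the function needs, such as reading from an S3 bucket, would be added as further statements in the permissions policy.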

CloudWatch Events Rule

CloudWatch Events support cron-like expressions, which we can use to define how often an event is created. We'll also need to make sure that we add our Lambda function as a target for those events.
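For a feel of what these expressions look like, the sketch below builds the two forms a scheduled rule accepts: `rate(...)` for fixed intervals and `cron(...)` with six fields (minutes, hours, day of month, month, day of week, year). The helper names are made up for this example, and the validation is deliberately minimal; it only encodes the rule that one of the two day fields has to be `?`.

```python
def rate_expression(value, unit):
    """Build a rate() schedule, e.g. rate(5 minutes) or rate(1 hour)."""
    if unit not in ("minute", "hour", "day"):
        raise ValueError("unit must be 'minute', 'hour', or 'day'")
    if value < 1:
        raise ValueError("value must be a positive integer")
    # The singular unit is used for a value of 1, the plural otherwise.
    suffix = "" if value == 1 else "s"
    return f"rate({value} {unit}{suffix})"


def cron_expression(minutes, hours, day_of_month, month, day_of_week, year="*"):
    """Build a six-field cron() schedule; times are interpreted as UTC."""
    # Day-of-month and day-of-week cannot both be specified:
    # this sketch requires exactly one of them to be '?'.
    if (day_of_month == "?") == (day_of_week == "?"):
        raise ValueError("exactly one of day_of_month/day_of_week must be '?'")
    fields = (minutes, hours, day_of_month, month, day_of_week, year)
    return "cron({})".format(" ".join(str(field) for field in fields))


every_five_minutes = rate_expression(5, "minute")           # rate(5 minutes)
daily_at_noon_utc = cron_expression(0, 12, "*", "*", "?")   # cron(0 12 * * ? *)
weekdays_at_eight = cron_expression(0, 8, "?", "*", "MON-FRI")  # cron(0 8 ? * MON-FRI *)
```

Note that the six-field format differs from classic crontab syntax, which has five fields and no `?` placeholder.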

Lambda Permission

Creating the events and targeting the Lambda function isn’t enough. We'll also need to make sure that the events are allowed to invoke our Lambda function. Anything that wants to invoke a Lambda function needs to have explicit permission to do that.
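The explicit permission mentioned above boils down to a handful of parameters. The sketch below only assembles them as a dictionary so it can run without AWS access; the function name, the statement ID, the Lambda function name, and the rule ARN are all placeholders invented for the example. The keys match the parameters of the Lambda `AddPermission` API.

```python
def invoke_permission_params(function_name, rule_arn,
                             statement_id="allow-events-invoke"):
    """Parameters that let one events rule invoke one Lambda function."""
    return {
        "FunctionName": function_name,
        "StatementId": statement_id,           # any ID unique within the policy
        "Action": "lambda:InvokeFunction",
        "Principal": "events.amazonaws.com",   # the service doing the invoking
        "SourceArn": rule_arn,                 # restricts access to this rule
    }


# Placeholder names and account number, for illustration only.
params = invoke_permission_params(
    "crypto-price-fetcher",
    "arn:aws:events:us-east-1:123456789012:rule/every-five-minutes",
)

# With boto3 installed and credentials configured, these could be applied as:
#   boto3.client("lambda").add_permission(**params)
```

Including `SourceArn` is a design choice worth keeping: without it, any rule in the events service could invoke the function.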

These are the building blocks of our AWS Lambda cron job. Now that we have an idea of all the moving parts of our job, let's see how we can implement it on AWS.

Implementing a Cron Job on AWS

A lot of the interactions we described earlier are taken care of by Amazon automatically. In a nutshell, all we need to do is to implement our service (the actual lambda function) and add rules to it (how often and how the lambda will be executed). Both permissions and roles are taken care of by Amazon; the defaults provided by Amazon are the ones we'll be using.

Lambda function

First, let's start by implementing a very simple lambda function. In the AWS dashboard, use the Find Services function to search for lambda. In the lambda console, select Create a function. At this point, we should be in Lambda > Functions > Create Function.

To get things going, let's start with a static log message. Our service will only be a print function. For this, we'll use Node.js 10.x as our runtime language. Give it a function name, and on Execution Role let's stay with Create a new role with basic lambda permissions. This is a basic set of permissions on IAM that will allow us to upload logs to Amazon CloudWatch Logs. Click Create Function.

Our function is now created with an IAM Role. In the code box, substitute the default code with the following:

exports.handler = async (event) => {
  console.log("Hello Sitepoint Reader!");
  return {};
};

To check if the code is executing correctly, we can use the Test function. After giving a name to our test, it will execute the code and show its output in the Execution Result field just below our code.

If we test the code above we can see that we have no response, but in the function logs, we can see we have our message printed. This indicates that our service is running correctly so we can proceed with our cron implementation.

The post Create a Cron Job on AWS Lambda appeared first on SitePoint.

by Claudio Ribeiro via SitePoint https://ift.tt/34ZWTPf

0 notes

Text

Built With Ionic: JuntoScope

This is a guest post by Jedi Weller and his team at OpenForge. They have been pioneering new ways of designing digital solutions, integrating cross-disciplinary teams, and sharing common knowledge across technology companies in various verticals. Their work at OpenForge continues to lay the groundwork for clearer communication and enhanced transparency in the technology and startup communities.

Dev Scoping – The Final Frontier

In our industry, it’s often the case that software developers feel frustrated with the scoping process and the ‘need’ to put a ‘fixed’ number of hours on a complex feature. Developers everywhere try to estimate based on experience and past projects, but the number of variables in software development can make scoping a perilous task.

This problem is exacerbated in team environments with Junior and Senior developers working together toward common solutions. This often results in Junior devs feeling ‘peer pressured’ (even unintentionally) into reducing their scopes to match other developers.

So, we built a way to help.

JuntoScope (“Together We Scope”) is a free, open-sourced, anonymous scoping tool for teams. We built it using Ionic V3, Firebase 4.12.1, a Serverless (FaaS) Architecture, and the Teamwork’s Projects API. The app is available on Google Play and the App Store.

So – let’s talk about our experience in building JuntoScope. No marketing, no bs, just straight up “here’s how it works.” After all, it’s a free tool – we don’t care if you use it or steal it, but at least buy us a beer the next time you see us 🙂

Designing The App

The JuntoScope design process was actually led by our development team, which was a fun change of pace. It was the dev team’s first time acting as the client, so we used a couple of our team’s processes to facilitate the communication between design & feature requirements.

We started by printing these iPhone printouts and then hand-drawing the flow.

We migrated these to Sketch, with the design team using Abstract for design source control. We used the Sketch Ionic UI Plugin to make design easy.

In order to keep language the same for designers and developers, we created a ‘legend’, that represents page transitions, routing, and data passthrough, and then started building!

We open-sourced the sketch file, which can be found here.

Folder Structure

We opted for a feature-oriented file structure to focus on the four distinct features in JuntoScope. We believe this will scale nicely as we build further support and features.

App

Generic app bootstrapping, auth guard, etc.

Assets

This project is simple, so we just keep the @fonts/folder in assets. In larger projects, we keep shared file assets and an organized file directory.

Environments

Stores our production vs. dev environment configuration

Features

We opted for a feature-oriented file structure, with four main features

Models

Define our data models across the app

Shared

We always include pop-ups, modals, etc. in a “shared” folder in our projects

Store

Theme

Commit Sanity

We can’t emphasize enough the importance of succinct, organized commits. Specifically, we used these tools:

Commitizen – it’s freaking amazing (but also frustrating when you first use it).

TSLint – Yes, absolutely necessary.

Prettier – Seriously, do you want to worry about line-wrapping?

An Intern’s Perspective – Serverless Architecture

We used this project as a great learning experience for one of our long-term interns, Claudio Del Valle, who’s been with us twice now during his term at Drexel. So, we felt it only appropriate he should get a chance to explain the architecture. Check out the code for more details.

We wanted to provide a seamless experience across all platforms, so we were looking for responsiveness and data persistence. In this case, using REST would create too much overhead and would require an overly complicated architecture. Therefore, we needed a simple way to establish a real-time connection between the moderator, participants, and our backend. I imagine some readers are already thinking WebSockets by now. We thought about it too, but we turned to Firebase instead.

As I alluded to above, WebSockets have been the popular choice for many developers who are looking for real-time, bi-directional communication between client and server. They provide excellent performance and security. Additionally, using a WebSocket-based implementation would be cheaper than using what’s sometimes called a ‘pull’ system. This is when the client-side has to ask the server for new information constantly. Instead, using WebSockets can be thought of as a ‘push’ system, where the server can emit values to the clients and vice versa.
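The difference between 'pull' and 'push' can be shown with a small in-memory sketch. Nothing here uses WebSockets or Firebase; the `SessionBackend` class is a made-up stand-in for a server, and the loop simply counts how often a polling client has to ask compared with how often a subscribed client is notified.

```python
class SessionBackend:
    """In-memory stand-in for a backend holding one changing value."""

    def __init__(self):
        self._value = None
        self._subscribers = []
        self.read_count = 0  # how many times clients asked for the value

    def read(self):
        """Pull: the client calls this whenever it wants to check for news."""
        self.read_count += 1
        return self._value

    def subscribe(self, callback):
        """Push: the client registers once and is called on every change."""
        self._subscribers.append(callback)

    def publish(self, value):
        self._value = value
        for callback in self._subscribers:
            callback(value)


backend = SessionBackend()

pushed = []                       # what the subscribed client received
backend.subscribe(pushed.append)

pulled = []                       # what the polling client noticed
for tick in range(5):             # the polling client asks on every tick
    if tick == 2:
        backend.publish("estimate submitted")
    latest = backend.read()
    if latest is not None and (not pulled or pulled[-1] != latest):
        pulled.append(latest)
```

Both clients end up with the same single update, but the polling client made five requests to get it, while the subscribed client was called exactly once. That overhead is the cost the 'push' model avoids.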

Our project architecture could be described as serverless. This means that we use a service that manages the servers for us, namely, Firebase. It not only hosts our data, but our backend consists of an Express app hosted as Cloud Functions on Firebase. Now, had we decided to use WebSockets and wanted to remain serverless, we most likely would’ve had to use a different service to host our server where we would manage the sockets manually. Here is where we decided to remain as lean as possible by leveraging the service we were already using. Furthermore, by using a popular Angular library for Firebase, angularfire2, we were able to get the result we wanted.

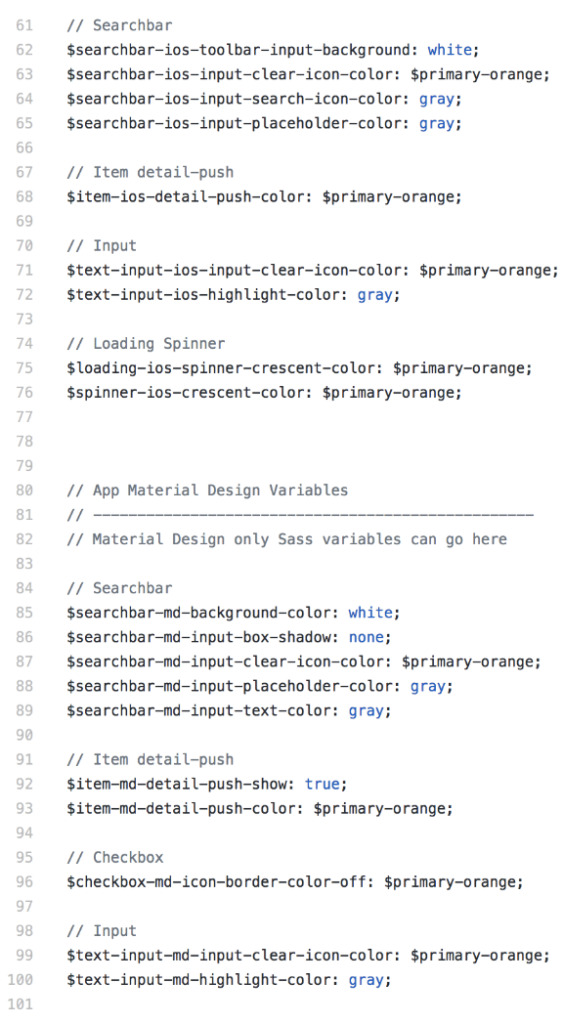

Custom Styling

Having an organized CSS and a style guide is incredibly important when creating an app. We always try to identify reusable and similar components to avoid code duplication and design inconsistency later down the line.

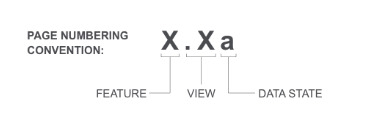

How to organize the code is a matter of preference; Ionic recommends organizing by platform. For this project, we took that one step further and kept the order of variables consistent.

For instance, our <ion-searchbar> component overrides are declared first in all 3 of our platform (iOS, Android, and Windows) sections.

We’ve also separated the responsibilities of variables.scss (framework variables) from app.scss (custom component styles) – though both are still global.

Lessons Learned – A Note From Paulina (@paulpaultweets)

You will notice at the bottom of variables.scss we import and declare our custom font called “Avenir Next”. It’s a lot of code for not a lot of value; so my recommendation is to avoid custom fonts, unless there is a quantifiable business justification for doing so. In addition, web application fonts result in very heavy load times. Keep this in mind!

As we’re building an application, I often find pieces I want to refactor and shrink down as I go along. I’ve found it’s best to backlog these items as I’m working, so that I can focus on the task at hand but still make sure the refactoring is completed.

Bugs & Challenges

The overall development and design of our Ionic V3 App took approximately 383 hours to build; we used Teamwork Projects to track billing/hours logging. Even though the scope of the project was not large (2-3 months), we had a couple of major setbacks along the way.

New Experience – Designing Error States: Since this was the first time our dev team led the design process, we missed some of our normal process that includes thinking through error states and transitions. Luckily, we were able to identify a couple holes we found in the flow through trial and error.

Change in Teamwork Authentication API: We found out on the app store submission date that Teamwork had updated their API to a more OAuth-based approach. Maybe we should have subscribed to those monthly newsletters earlier 😀

We had no marketing language: As previously mentioned, we never intended to market this tool – it was just something we built internally. So when all of a sudden we had questions relating to the privacy policy, TOS, or app store listing, everyone drew blanks and we had to scramble to get all these resources together.

Final Thoughts

Overall, the project was a lot of fun, and the front-end implementation, bolstered by Ionic framework v3, was incredibly straightforward to use. We were able to experiment with the Function as a Service architecture through Firebase, which was a lot of fun too.

Most importantly, this tool allows us to really speed up our internal scoping process and keep our software development scoping ACCURATE by using the team average. The team likes this process, so we’re going to keep using it.

We’d encourage anyone else using Teamwork Projects to try it out as well and let us know your thoughts. If enough people request, we have talked about adding support to tools like JIRA and Basecamp, but for now, we’re just supporting one. By the way, the team over at Teamwork.com loves what we’ve built and has agreed to give a 10% discount to the community. Just use discount code ‘JuntoScope’. Thanks for the love Teamwork <3.

This project went blazingly fast by utilizing the Ionic Framework. The entire application was rebuilt from our proof of concept using Ionic 3 in just a couple of weeks, and the performance is fantastic. We scheduled 0 hours to work on performance optimizations, which is a blessing for anyone who knows how arduous performance optimization can be.

If you DO decide to use it, we’ll be sharing more information with the community about overall server load usage, costs associated with FaaS per user, and anything else valuable we can think of (except, of course, private data). The nice thing about having a real production product that’s open sourced is it gives us the opportunity to talk about trials, tribulations, and issues that come up in a production app, live as they are happening.

Stay cool, Ionic Community! @jedihacks

JuntoScope is available on Google Play and the App Store.

0 notes

Text

Representing Web Developers In The W3C

Rachel Andrew

2018-09-27T14:15:00+02:00 2018-09-27T12:57:48+00:00

One of the many things that I do is to be a part of the CSS Working Group as an Invited Expert. Invited Experts are people who the group wants to be part of the group, but who do not work for a member organization which would confer membership upon them. In this post, I explain a little bit about what I feel my role is in the Working Group, as a way to announce a possible change to my involvement with the support of the Dutch organization, Fronteers.

I’ve always seen my involvement in the CSS Working Group as a two-way thing. I ferry information from the Working Group to authors (folks who are web developers, designers, and people who use CSS for print or EPUB) and from authors to the Working Group. Once I understand a discussion that is happening around a specification which would benefit from author input, I can explain it to authors in a way that doesn’t require detailed knowledge of CSS specifications or browser internals.

This was the motivation behind all of the work I did to explain Grid Layout before it landed in browsers. It is work I continue, for example, my recent article here on Smashing Magazine on Grid Level 2 and subgrid. While I think that far more web developers are capable of understanding the specifications than they often give themselves credit for, I get that people have other priorities! If I can distill and share the most important points, then perhaps we can get more feedback into the group at a point when it can make a difference.

There is something I have discovered while constantly unpacking these subjects in articles and on stage. While I can directly ask people for their opinion (and sometimes I do), the answers to those direct questions are most often the obvious ones. People are put on the spot; they feel they should have an opinion and so give the first answer they think of. Even when they’re given an A or B choice about a subject (when asked to vote), they may not be in a place to fully consider all of the implications.

If I write or talk about a subject, however, I don’t get requests for CSS features. I get questions. Some of those I can answer and I make a note to perhaps better explain that point in future. Some of those questions I cannot answer because CSS doesn’t yet have an answer. I am constantly searching for those unanswered questions, for that is where the future of CSS is. By being a web developer who also happens to work on CSS, I’m in a perfect place to have those conversations and to take them back with me to the Working Group when relevant things are being discussed and we need to know what authors think.

To do this sort of work, you need to be able to explain things well and to have a nerdy interest in specifications. I’m not the only person on the planet who has these attributes. However, to do this sort of work as an Invited Expert to the CSS Working Group requires something else; it requires you to give up a lot of your time and be able to spend a lot of your own money. There is no funding for Invited Experts. A W3C Invited Expert is a volunteer, attending weekly meetings, traveling for in-person meetings, spending time responding to issues on GitHub, chatting to authors, or even editing specifications and writing tests. This is all volunteer work. As an independent — sat at a CSS Working Group meeting — I know that while practically every other person sat around that table is being paid to be there — as they work for a browser vendor or another company with an interest — I’m not. You have to care very deeply, and have a very understanding family for that to be at all sustainable.

It is this practical point which makes it hard for there to be more people like me involved in this kind of work — in the way that I’m involved — as an independent voice for authors. To actually be paid to work on this stuff usually means becoming employed by a browser vendor, and while there is nothing wrong with that it changes the dynamic. I would then be Rachel Andrew from Microsoft/Google/Mozilla. Who would I be speaking for? Could I remain embedded in the web community if I was no longer a web developer myself? It’s for this reason I was very interested when representatives from Fronteers approached me earlier this year.

Fronteers are an amazing organization of Dutch web developers. One of my first international speaking engagements was to go to Amsterdam to speak at one of their meetups. I was immediately struck by the hugely knowledgeable community in Amsterdam. If I am invited to speak at a front-end event in the Netherlands, I know I can take my nerdiest and most detailed talks along with me; the community there will already know the basics and be excited to hear the details.

Anneke Sinnema (Chair of Fronteers) and Peter-Paul Koch (Founder) approached me with an idea they had about their organization becoming a Member of the W3C, which would then entitle them to representation within the W3C. They wanted to know if I would be interested in becoming their first representative, a move that would make me an official representative for the web development community and give me a stipend so that some paid hours would be covered to do that work. This plan needs to be voted upon by Fronteers members, so it may or may not come to fruition. However, we all hope it will, and not just for me but as a possible start to a movement which sees more people like myself involved in the work of creating the web platform.

My post is one of a few being published today to announce this as an idea. For more information on the thoughts behind this idea, read “Web Developer Representation In W3C Is Here” on A List Apart. Dutch speakers can also find a post on the Fronteers blog.

0 notes

Text

Asked and Answered:

Robotic process automation (RPA) lets businesses quickly transition analog, human-led activities into automated digital actions.

What differentiates RPA from tools that have complementary or overlapping capabilities is the ability to implement computer-initiated actions that would typically require human intervention. Examples of such actions include pulling information from an unstructured document, using a visual interface to enter or query data, extracting information from printed forms, or evaluating voice input. Without this capability, digital automation is not possible and, therefore, neither are the benefits associated with such automation.

RPA is democratizing automation—empowering employees and increasing efficiency within organizations. But the implementation of RPA processes within a business can be difficult—and often there are alternatives that are more cost-effective and better aligned with business needs.

In this report, we lay out the questions and considerations an organization needs to examine before choosing RPA integration. We look at:

Situations best suited to RPA deployment

Where alternative solutions might be considered

How RPA compares to low-code tools

Pricing

Security

Staff expertise

Can RPA Support the Entire Enterprise, Or Only Front-Office Automation?

RPA has an advantage through visual and low-code development capabilities that enable citizen developers and non-IT staff to automate routine front-office tasks. And IT teams can easily write automation functions for back-office integration in high-level programming languages. Moreover, today’s serverless platforms and PaaS vendors allow developers to focus on the specific integration task while leveraging cloud services.

This means IT can respond very quickly to basic API-based application integration tasks, leveraging low-cost platforms and services. In contrast, most RPA platforms incur additional license overhead on a per-bot basis to scale and operate bots in parallel.

Back-office integration will typically see transaction ratios of 30:1 or higher when compared to front-office integration, so coding the integration becomes much more economical.

What Problems Could RPA Solve?

Here are some of the key use cases for RPA:

Assisted Data Capture – RPA tools can review the information being entered into a form in real time and assess for accuracy. They can also pull information from other systems to fill in fields, and enable new mobile and voice-based systems by taking care of detailed form entry within the bot.

Assisted Customer Service – RPA tools can monitor message boards, email boxes, social media, and other sources where customers may be seeking assistance or raising concerns about products and services. This capability is significantly enhanced in tools that have democratized AI by giving users access to natural language processing, which lets the bot go beyond matching common terms and infer context.

Business Process Outsourcing (BPO) – Many RPA tools can help analyze multiple input sources and either immediately address the need, for example to pay a claim, or place the request into an exception-handling queue to be addressed by a human. This significantly reduces the number of humans required to process large volumes of transactions daily.
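
The BPO routing described above (handle the simple case immediately, send everything else to a human) can be sketched roughly as follows. This is only an illustration: the `Claim` fields, the `AUTO_PAY_LIMIT` threshold, and the `triage` function are hypothetical names that do not come from any particular RPA product.

```python
from collections import deque
from dataclasses import dataclass

# Hypothetical business rule: claims at or below this amount may be auto-paid.
AUTO_PAY_LIMIT = 500.00


@dataclass
class Claim:
    claim_id: str
    amount: float
    fields_complete: bool  # True when every required field was extracted


def triage(claim: Claim, exception_queue: deque) -> str:
    """Pay simple claims immediately; queue everything else for a human."""
    if claim.fields_complete and claim.amount <= AUTO_PAY_LIMIT:
        return "paid"
    exception_queue.append(claim)
    return "queued"


queue: deque = deque()
results = [
    triage(Claim("C-1", 120.0, True), queue),    # small and complete
    triage(Claim("C-2", 9800.0, True), queue),   # too large to auto-pay
    triage(Claim("C-3", 75.0, False), queue),    # missing fields
]
print(results)     # ['paid', 'queued', 'queued']
print(len(queue))  # 2
```

Only the claims left in the exception queue need human attention, which is where the reduction in manual processing comes from.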

Can RPA Tools Replace iPaaS and Associated Integration Tools?

RPA can automate many functions, but if the goal is significant data remapping and restructuring, iPaaS tools will provide a better overall solution.

iPaaS platforms tend to be licensed on a server basis whereas RPA tools are usually licensed on a per-bot basis, allowing iPaaS tools to scale in a more cost-effective manner than RPA.
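
To make the scaling argument concrete, here is a small back-of-the-envelope sketch. All prices and the jobs-per-server capacity are invented placeholders, not vendor figures; the point is only the shape of the two cost curves.

```python
import math


def per_bot_cost(parallel_jobs: int, price_per_bot: int) -> int:
    """Per-bot licensing: one licence for every job that runs in parallel."""
    return parallel_jobs * price_per_bot


def per_server_cost(parallel_jobs: int, jobs_per_server: int,
                    price_per_server: int) -> int:
    """Per-server licensing: pay for whole servers, each hosting many jobs."""
    servers = math.ceil(parallel_jobs / jobs_per_server)
    return servers * price_per_server


# Hypothetical prices, chosen purely for illustration.
BOT_PRICE = 1_000
SERVER_PRICE = 5_000
JOBS_PER_SERVER = 25

for jobs in (1, 10, 50):
    print(jobs,
          per_bot_cost(jobs, BOT_PRICE),
          per_server_cost(jobs, JOBS_PER_SERVER, SERVER_PRICE))
```

Under these assumed numbers the per-bot model is cheaper for a single job and more expensive once many jobs run in parallel, so the crossover point depends entirely on real pricing and volume.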

However, if transaction volumes are low, or if the automation is a combination of both front-office and back-office tasks—for example, pulling data off the web and updating a CRM or ERP application via an API—the business may benefit from the simplicity of having RPA complete the entire task.

Can RPA Tools Provide Low-Code Capabilities?

Today’s RPA tools often overlap with low-code tools; however, RPA tools typically do not address user experience and are used to create headless services or “bots,” whereas low-code tools focus on web and mobile applications development.

Some RPA tools allow you to augment the visual workflow with traditional programming. This capability can be very useful for handling some complex tasks. However, it also opens a can of worms regarding long-term support for the bot.

If you need to build a complex task, your business would best be served by developing it as a separate microservice and then calling the microservice from within the RPA application. For some businesses, this may lessen the value of using an RPA solution rather than IT to alleviate the backlog of automation work.
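
As a rough sketch of what "a separate microservice" could look like, the example below uses only the Python standard library. The `normalize_invoice` logic, the field names, and the port are hypothetical; a real service would carry whatever complex task the bot should not own.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def normalize_invoice(raw: dict) -> dict:
    """Hypothetical 'complex task': clean up fields a bot scraped from a form."""
    return {
        "invoice_id": str(raw["invoice_id"]).strip().upper(),
        "total_cents": round(float(raw["total"]) * 100),
    }


class InvoiceHandler(BaseHTTPRequestHandler):
    """Accepts a JSON POST and returns the normalized record as JSON."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        body = json.dumps(normalize_invoice(payload)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Run the service locally; the port is an arbitrary choice."""
    HTTPServer(("127.0.0.1", port), InvoiceHandler).serve_forever()
```

Calling `serve()` starts the service, and the RPA workflow would then issue one HTTP POST to it. Keeping the logic in `normalize_invoice` means it can be tested and versioned by IT independently of the bot.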

Can Automation Tools From Amazon and Microsoft be Used in Place of RPA?

Cloud service providers, such as Amazon and Microsoft, have a diverse set of services available to developers that can be provisioned and operated through a set of programming interfaces. This makes them very accessible and easy to combine to deliver automated business processes.

Moreover, there are products, such as PowerApps and Flow from Microsoft, that democratize the use of powerful services like natural language processing, image recognition, optical character recognition, and database access through visual modeling. While these tools cannot automate existing user interfaces, they do offer usable alternatives to using RPA to create unattended bots for routine tasks, such as responding to email or searching social media and the web.

What Pricing Model is Most Appropriate?

While SaaS pay-as-you-go might offer better overall pricing, it may not be suitable for working with an organization’s internal applications without expensive connectivity options.

Pricing models vary widely when it comes to RPA products. Most have support for deployment in owned data centers and as SaaS. They also offer licensed runtime capabilities and pay-as-you-go subscriptions.

Organizations need to consider how the pricing model will affect their ability to parallelize certain bots if they want to scale.

Many RPA tools place limits on the execution of bots, and often the schedulers enforce serialized execution. Overcoming this limitation usually requires additional licensing.
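
The effect of serialized execution can be illustrated with a toy scheduler in which the number of worker threads stands in for the number of licensed bots. The `run_bot` job and its 0.05 second duration are made up for the demonstration; this is not how any specific RPA scheduler is implemented.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_bot(job_id: int) -> int:
    """Stand-in for one bot run; sleeps instead of doing real work."""
    time.sleep(0.05)
    return job_id


def run_jobs(job_ids, licensed_bots: int):
    """Run all jobs with at most `licensed_bots` executing at once."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=licensed_bots) as pool:
        finished = list(pool.map(run_bot, job_ids))
    return finished, time.perf_counter() - start


jobs = list(range(8))
serial_done, serial_seconds = run_jobs(jobs, licensed_bots=1)      # serialized
parallel_done, parallel_seconds = run_jobs(jobs, licensed_bots=4)  # 4 at a time
print(f"1 licence: {serial_seconds:.2f}s, 4 licences: {parallel_seconds:.2f}s")
```

With a single licence the eight jobs run back to back; with four they finish in roughly a quarter of the time, which is the throughput an organization is paying for when it buys additional licences.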

Businesses may also be constrained by the need for integration with other software applications.

What Should I Worry About Regarding Security?

The risk of leaking confidential information, or of making systems easier to breach, increases significantly with the introduction of the citizen developer. To combat this, we advise creating a center of excellence (CoE).

One of the key tasks of a CoE is to ensure bots adhere to governance related to security. Here are some factors a CoE should include in its governance requirements:

No embedded credentials

Proper use of privileged access management (PAM) and vaults

Minimize risk of unauthorized users accessing a system in attended mode

Ensure bot design does not interfere with system operations
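
The first requirement, no embedded credentials, might look like this in a scripted bot. The sketch assumes the secret is injected into the process environment at run time (for example by a vault or PAM agent); the variable name `CRM_BOT_TOKEN` and the helper `load_bot_credential` are hypothetical.

```python
import os


class MissingCredentialError(RuntimeError):
    """Raised when a required secret was not injected into the environment."""


def load_bot_credential(name: str) -> str:
    """Read a secret from the environment instead of hard-coding it."""
    value = os.environ.get(name)
    if not value:
        # Fail loudly rather than falling back to a default baked into the bot.
        raise MissingCredentialError(f"{name} is not set; refusing to run")
    return value


try:
    token = load_bot_credential("CRM_BOT_TOKEN")  # hypothetical variable name
    print("credential loaded")
except MissingCredentialError as err:
    print(f"bot not started: {err}")
```

Because the bot definition never contains the secret, it can be reviewed, shared, and stored in version control without exposing access to the target system.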

Are There Enough Skilled Individuals to Support the Rapid Growth of RPA?

Due to vendors’ investments in publicly available software and education, many system administrators and programmers have been trained to use RPA tools. However, interest in automation is growing rapidly and there will be continued need for those who can manage the RPA environment, build centers of excellence, and drive governance around the use of RPA in the enterprise, as well as those who can help companies evaluate existing processes and incorporate RPA where appropriate.

Conclusion

Introducing RPA into a business is not simply a matter of technical implementation; it is a strategic and business-focused process that centers on an organization’s needs, targets and budgets.

RPA can make a big difference to organizations large and small, but it needs to be aligned with the strategic goals of each business. IT leaders must be sure that its implementation will bring business benefits and ROI that could not have been achieved otherwise with more easily accessible or cheaper tools and processes.

RPA streamlines repetitive tasks, freeing up employee time and increasing productivity—but this can only be achieved if it is implemented for the right processes and if you have trained staff who can successfully manage and incorporate it correctly.

from Gigaom https://gigaom.com/2020/08/24/asked-and-answered/

0 notes